What is a data lake?

A data lake is a repository where data is ingested in its original form without alteration. Unlike data warehouses or silos, data lakes use a flat architecture with object storage to maintain the files’ metadata. A data lake is most useful when it is part of a larger data management platform and integrates well with existing data and tools for more powerful analytics. The goal is to uncover insights and trends while remaining secure, scalable, and flexible.

Data lakes explained

A data lake is used to hold a large amount of data in its native, raw format in a central location—typically the cloud. By leveraging inexpensive object storage, open formats, and cloud scalability, a variety of applications can take advantage of the wealth of data contained in a data lake.

- All types of qualitative data, including unstructured data (often called big data) and semi-structured data, can be stored, which is critical for today’s machine learning and advanced analytics use cases.

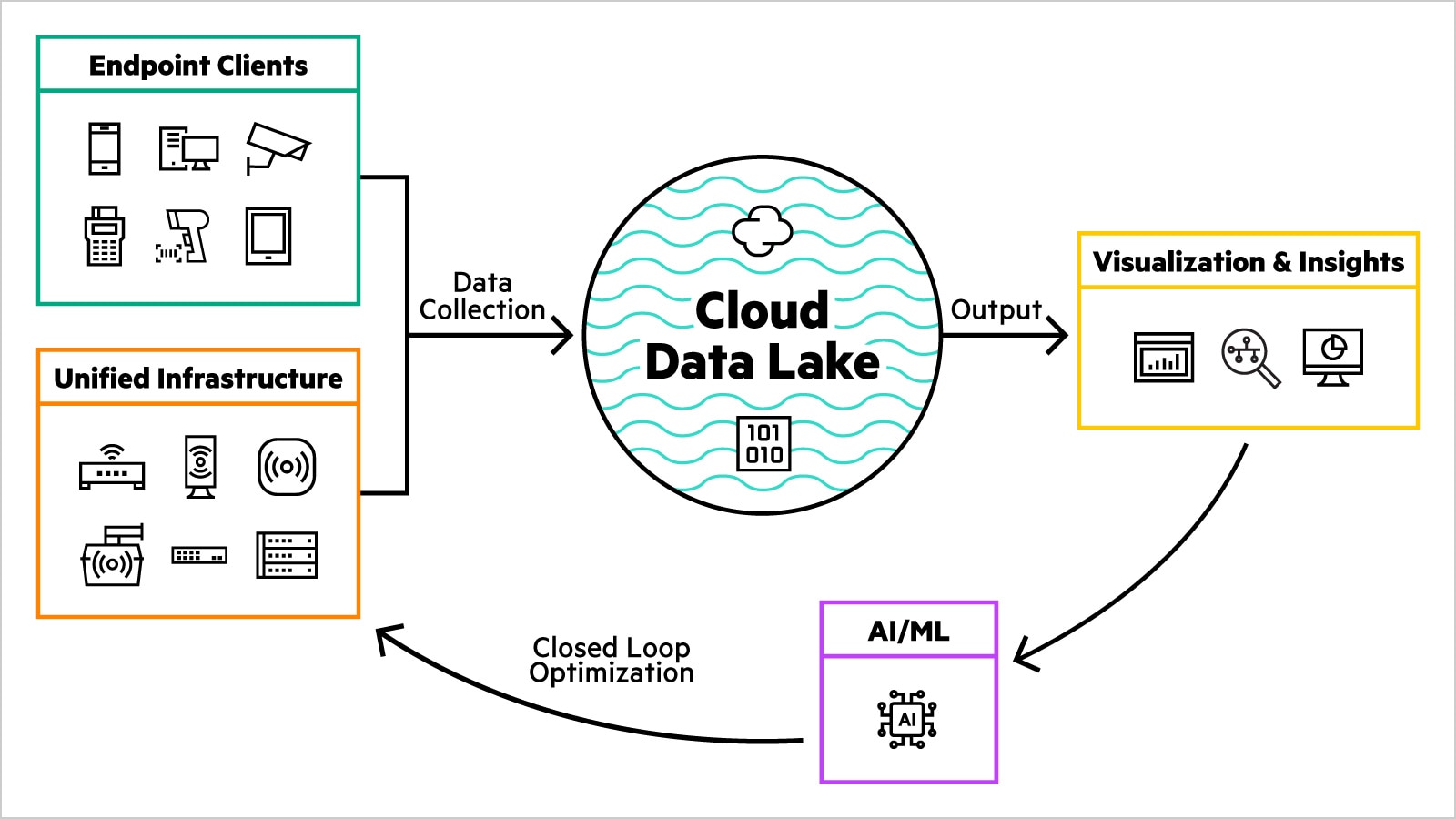

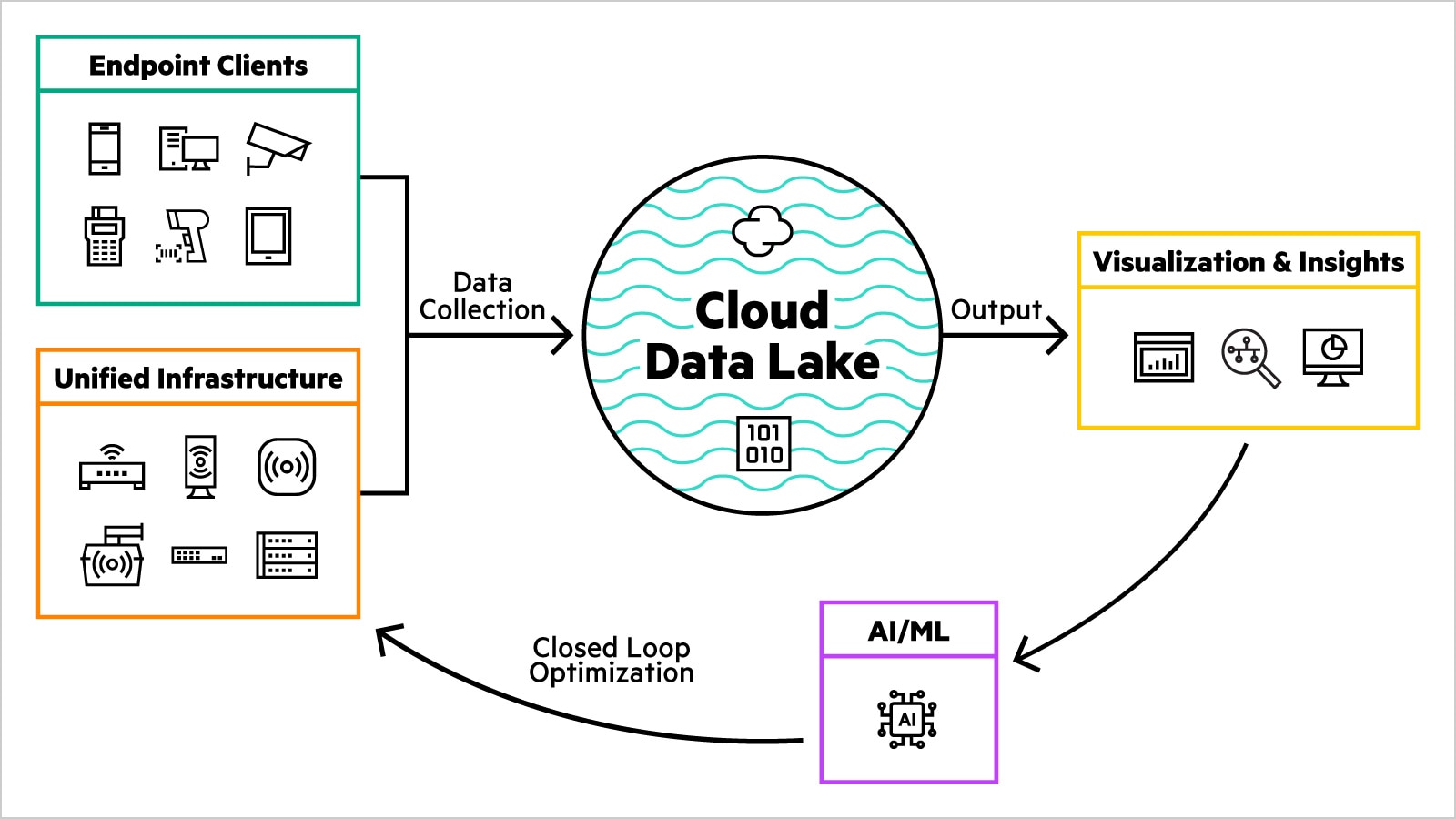

- In the networking space, think of infrastructure and endpoint telemetry being used as descriptors or classifiers that feed AI/ML models and algorithms to identify baselines and anomalies.

- As a customer, your infrastructure and endpoint clients feed the data lake, and your networking vendor maintains it to deliver AI-based tools that help IT operate your network more efficiently.
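The idea of using telemetry to identify baselines and anomalies can be illustrated with a minimal sketch. It assumes a simple rolling-mean baseline and a standard-deviation threshold; the function name, the sample values, and the thresholds are hypothetical, and production AI/ML models are considerably more sophisticated.

```python
from statistics import mean, stdev


def find_anomalies(samples, window=5, threshold=3.0):
    """Return indexes of samples that deviate from a rolling baseline.

    The baseline for each sample is the mean of the previous `window`
    samples. A sample is flagged when it lies more than `threshold`
    standard deviations away from that mean.
    """
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        baseline = mean(history)
        spread = stdev(history)
        # Skip perfectly flat history: with zero spread the ratio is undefined.
        if spread == 0:
            continue
        if abs(samples[i] - baseline) > threshold * spread:
            anomalies.append(i)
    return anomalies


# Hypothetical latency telemetry (milliseconds) from a single access point.
latency_ms = [20, 22, 21, 19, 20, 21, 95, 20, 22, 21]
print(find_anomalies(latency_ms))  # prints [6]
```

Because the baseline is recomputed from recent samples, no fixed limit has to be configured by hand for each site.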

Why do organizations choose data lakes?

Data lakes enable enterprises to transform raw data into structured data that is ready for SQL-based analytics, data science, and machine learning, and to do so with lower latency. All types of data are more easily collected and retained indefinitely, including streaming images, video, binary files, and more. Because the data lake accepts multiple file types and acts as a “safe harbor” for new data, it is more easily kept up to date.
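As a rough illustration of turning raw data into structured data ready for SQL, the sketch below parses raw JSON lines and loads selected fields into an in-memory SQLite table. The event fields, table name, and helper function are assumptions made for the example; a real data lake would typically read files from object storage and use a distributed query engine.

```python
import json
import sqlite3

# Hypothetical raw events, kept exactly as they arrived (one JSON document per line).
raw_lines = [
    '{"site": "hq", "client": "laptop-1", "rssi": -52}',
    '{"site": "hq", "client": "phone-7", "rssi": -71, "os": "android"}',
    '{"site": "branch", "client": "laptop-9", "rssi": -64}',
]


def load_events(lines):
    """Parse raw JSON lines and load selected fields into an in-memory SQL table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (site TEXT, client TEXT, rssi INTEGER)")
    rows = []
    for line in lines:
        record = json.loads(line)
        # Fields the table does not model (such as "os") remain in the raw copy.
        rows.append((record["site"], record["client"], record["rssi"]))
    conn.executemany("INSERT INTO events VALUES (?, ?, ?)", rows)
    return conn


conn = load_events(raw_lines)
query = "SELECT site, AVG(rssi) FROM events GROUP BY site ORDER BY site"
result = conn.execute(query).fetchall()
print(result)  # prints [('branch', -64.0), ('hq', -61.5)]
```

The raw lines are left untouched, so a different structured view can be derived from them later without re-ingesting anything.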

With this kind of flexibility, data lakes enable users with different skill sets, locales, and languages to perform the tasks they need. Compared with the data warehouses and silos that data lakes have in many cases replaced, the flexibility they provide to Big Data and machine learning applications is increasingly evident.

Benefits of a data lake

Data lake customer benefits include:

- Dynamic baselines for their site’s network performance without manually setting SLEs.

- Comparisons that highlight where similar sites are seeing issues based on their own data.

- Optimization tips based on performance data from similar customer sites.

- Constant retraining of AI/ML models as new technology, infrastructure, and endpoints emerge.

Data lake vs. data warehouse

While both data lakes and data warehouses can store large amounts of data, there are several key differences in how that data can be accessed and used. Data lakes store raw data of any file type. A data warehouse, by contrast, stores data that has already been structured and filtered for a specific purpose.

With their open format, data lakes do not require a specific file type, nor are users subject to proprietary vendor lock-in. One advantage of data lakes over silos or warehouses is the ability to store any type of data or file, rather than only what fits a more structured environment. Another is that the purpose of a data lake need not be defined when it is set up, whereas a data warehouse is created as a repository for filtered data that has already been processed with a specific intention.
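This difference is commonly described as applying structure when data is read rather than when it is written. The sketch below illustrates the idea under simple assumptions: the records, field names, and `read_with_schema` helper are hypothetical, and each consumer chooses its own fields from the same raw data.

```python
import json

# Hypothetical raw records stored without a predefined schema.
raw_records = [
    '{"user": "ana", "action": "login", "ms": 120, "device": "ios"}',
    '{"user": "raj", "action": "purchase", "ms": 340, "amount": 19.99}',
    '{"user": "ana", "action": "purchase", "ms": 280, "amount": 5.00}',
]


def read_with_schema(lines, fields):
    """Project each raw record onto `fields`, using None for missing values."""
    views = []
    for line in lines:
        record = json.loads(line)
        views.append({field: record.get(field) for field in fields})
    return views


# Two consumers read the same raw records with different intentions.
perf_view = read_with_schema(raw_records, ["action", "ms"])
sales_view = [
    row
    for row in read_with_schema(raw_records, ["user", "amount"])
    if row["amount"] is not None
]
print(perf_view)
print(sales_view)
```

Neither view had to be anticipated when the records were stored, which is the contrast with a warehouse that fixes its structure up front.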

A centralized data lake is preferable to silos and warehouses because it reduces issues like data duplication, redundant security policies, and difficulty with multi-user collaboration. To the downstream user, a data lake appears as a single place to find or combine data from multiple sources.

Data lakes are also, by comparison, very durable and economical because they scale well and leverage object storage. And since advanced analytics and machine learning with unstructured data are an increasing priority for many businesses today, the ability to “ingest” raw data in structured, semi-structured, and unstructured formats makes data lakes an increasingly popular choice for data storage.

What are data lake platforms?

Virtually all major cloud service providers offer modern data lake solutions. On-premises data centers continue to use the Hadoop Distributed File System (HDFS) as a near-standard. As enterprises continue to adopt the cloud, however, numerous options are available to data scientists, engineers, and IT professionals who want to move their data storage to a cloud-based data lake environment.

Data lakes are particularly helpful when working with streaming data, such as a continuous feed of JSON events. The three most typical business use cases are business analytics or intelligence, data science focused on machine learning, and data serving (high-performance applications that depend on real-time data).

All major cloud service providers, from Amazon Web Services (AWS) to Microsoft Azure to Google Cloud, offer the storage and services necessary for cloud-based data lakes. Whatever level of integration an organization is looking for, from simple backup to complete integration, there is no shortage of options.

How are data lakes used today?

Unlike two or three decades ago, most business decisions are no longer based solely on transactional data stored in warehouses. The sea change from the structured data warehouse to the fluidity of the modern data lake is a response to the changing needs and abilities of modern Big Data and data science applications.

Though new applications continue to emerge on an almost-daily basis, some of the more typical applications for the modern data lake focus on fast acquisition and analysis of new data. For example, a data lake can combine a CRM platform’s customer data with social media analytics, or with a marketing platform’s record of a customer’s buying history. When these sources are combined, a business can better understand potential areas of profit or the cause of customer churn.
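A minimal sketch of this kind of combination appears below. It assumes two hypothetical datasets that share a `customer_id` key; the field names and the rule for flagging churn risk are invented for the example and are not taken from any specific CRM or social media product.

```python
from collections import Counter

# Hypothetical CRM export and social media mentions sharing a customer_id key.
crm_customers = [
    {"customer_id": 1, "name": "Ana", "purchases_last_90d": 0},
    {"customer_id": 2, "name": "Raj", "purchases_last_90d": 4},
    {"customer_id": 3, "name": "Mei", "purchases_last_90d": 0},
]
social_mentions = [
    {"customer_id": 1, "sentiment": "negative"},
    {"customer_id": 1, "sentiment": "negative"},
    {"customer_id": 2, "sentiment": "positive"},
    {"customer_id": 3, "sentiment": "positive"},
]


def churn_candidates(customers, mentions):
    """Return names of customers with no recent purchases and negative mentions."""
    negatives = Counter(
        m["customer_id"] for m in mentions if m["sentiment"] == "negative"
    )
    return [
        c["name"]
        for c in customers
        if c["purchases_last_90d"] == 0 and negatives[c["customer_id"]] > 0
    ]


print(churn_candidates(crm_customers, social_mentions))  # prints ['Ana']
```

Neither source alone identifies the at-risk customer: the CRM data shows two inactive customers, and only the sentiment data separates them.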

Likewise, a data lake enables research and development teams to test hypotheses and assess the results. With more and more ways to collect data in real time, a data lake makes storage and analysis faster, more intuitive, and accessible to more engineers.

HPE and data lakes

Big Data is how businesses today tackle their biggest challenges. While Hadoop has been successful in distilling value from unstructured data, organizations are looking for newer, simpler ways to do it.

Today’s businesses make enormous expenditures in analytics, from systems to data scientists to their IT workforce, to implement, operate, and maintain their on-premises Hadoop-based data management. As with any data environment, capacity needs can change rapidly.

GreenLake offers organizations a scalable, cloud-based solution that can fundamentally simplify their Hadoop experience, reducing complexity and cost so that they can focus on gaining the insights that the data provides. GreenLake offers a complete end-to-end solution with hardware, software, and HPE Services.

By maximizing the potential of your data, GreenLake takes full advantage of the HDFS data lake already contained in the on-premises environment, while leveraging the advantages and insights offered in the cloud.